
As global enterprises accelerate their shift toward AI chatbot solutions to streamline operations, many still assume that a chatbot is nothing more than an “automated responder.”

The truth is far more complex.

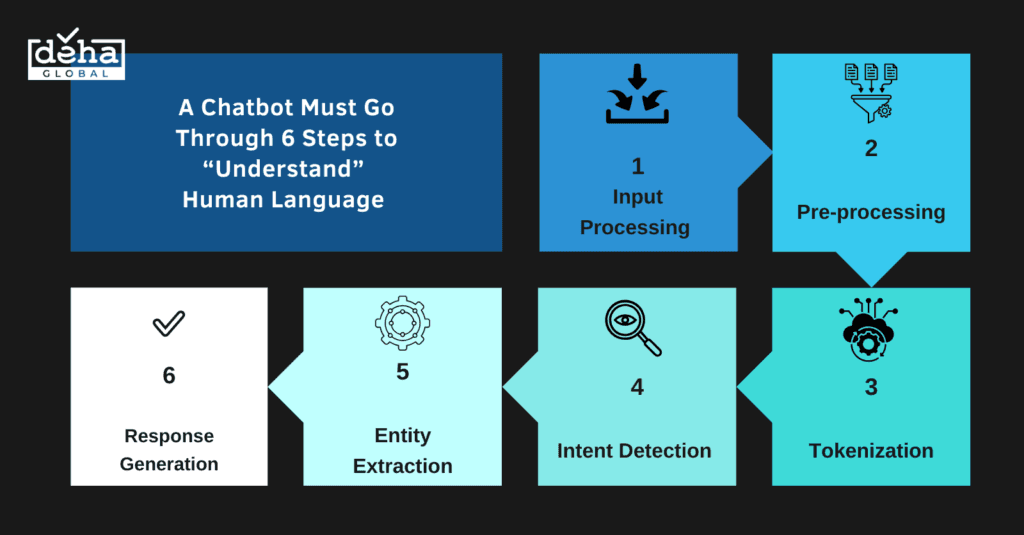

In a well-designed enterprise AI chatbot, every user message passes through six distinct natural language processing (NLP) stages before the system produces an accurate response. The quality of these stages largely explains why some chatbots deliver intelligent, human-like interactions while others degrade the customer experience.

From the perspective of an AI implementation specialist, understanding these six steps helps businesses:

This pipeline reflects industry-standard NLP practices used in large-scale conversational systems from Google, OpenAI, Amazon (Amazon Lex), and Meta AI, as consolidated from sources such as Stanford NLP and ACL publications.

In the first stage, the chatbot receives input from the user: text, voice, or system-generated data.

At this stage, the system ensures the input is captured accurately to reduce downstream errors.

If the input is flawed, every subsequent step will suffer.
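To make the input-capture stage concrete, here is a minimal Python sketch that validates a message before it enters the pipeline. The channel names, the length limit, and the `capture_input` function are hypothetical choices for illustration; voice input is assumed to have already been transcribed to text by an upstream speech-to-text service.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical channels; voice input is assumed to arrive already transcribed.
ALLOWED_CHANNELS = {"text", "voice", "system"}
MAX_LENGTH = 2000  # assumed character limit, chosen for illustration


@dataclass
class CapturedInput:
    channel: str
    raw_text: str
    # Timestamp the message on arrival (UTC) for logging and auditing.
    received_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


def capture_input(channel: str, raw_text: str) -> CapturedInput:
    """Reject malformed input early so later stages only see usable messages."""
    if channel not in ALLOWED_CHANNELS:
        raise ValueError(f"unsupported channel: {channel!r}")
    if not raw_text.strip():
        raise ValueError("empty input")
    if len(raw_text) > MAX_LENGTH:
        raise ValueError("input too long")
    # Store the text unchanged; cleaning is left to the preprocessing stage.
    return CapturedInput(channel=channel, raw_text=raw_text)


msg = capture_input("text", "Payment of order 5001")
print(msg.channel, msg.raw_text)  # text Payment of order 5001
```

Keeping the raw text untouched at this point means any later cleaning step can be audited against exactly what the user sent.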

The second stage preprocesses the raw input. The goal is to produce clean, consistent text so the model can interpret it correctly.
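A minimal sketch of what such preprocessing might look like, assuming Unicode normalization, case-folding, punctuation stripping, and whitespace cleanup are the desired steps. The `preprocess` function is hypothetical; real pipelines choose their own steps, and case-sensitive models may skip lowercasing entirely.

```python
import re
import unicodedata


def preprocess(text: str) -> str:
    """Return a clean, consistent version of the raw user text."""
    # NFKC folds compatibility characters, e.g. full-width digits, into standard forms.
    text = unicodedata.normalize("NFKC", text)
    # Case-fold so "Payment" and "payment" compare equal.
    text = text.casefold()
    # Replace punctuation and symbols with spaces (letters, digits, underscores stay).
    text = re.sub(r"[^\w\s]", " ", text)
    # Collapse repeated whitespace and trim both ends.
    return re.sub(r"\s+", " ", text).strip()


print(preprocess("  Payment   of ORDER #５００１!! "))  # payment of order 5001
```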

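In the third stage, tokenization, the chatbot breaks sentences into smaller units (“tokens”) so the model can process each component.

This technique is foundational in modern AI systems. Production models typically split text into subword units using schemes such as WordPiece (Google), SentencePiece (Google), and the byte-pair encoding (BPE) tokenizers used by GPT models.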

Example (simplified to whole words; subword tokenizers may split words further):

“Payment of order 5001”

→ [“payment”, “of”, “order”, “5001”]
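The example above is word-level. The sketch below reproduces it with a simple regex tokenizer, then shows a greedy longest-match-first subword split in the style of WordPiece. The toy vocabulary is invented for illustration; real WordPiece vocabularies are learned from large corpora, and GPT-style BPE tokenizers split text differently.

```python
import re


def word_tokenize(text: str) -> list[str]:
    """Word-level tokenizer: lowercase, then keep runs of letters/digits."""
    return re.findall(r"\w+", text.lower())


def wordpiece_tokenize(word: str, vocab: set[str], unk: str = "[UNK]") -> list[str]:
    """Greedy longest-match-first subword split, in the style of WordPiece.

    Continuation pieces carry the "##" prefix, as in BERT-style vocabularies.
    """
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        # Try the longest remaining substring first, shrinking until a vocab hit.
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            # No piece matched: the whole word becomes the unknown token.
            return [unk]
        pieces.append(match)
        start = end
    return pieces


# Toy vocabulary, invented for illustration only.
TOY_VOCAB = {"pay", "##ment", "of", "order", "500", "##1"}

words = word_tokenize("Payment of order 5001")
print(words)  # ['payment', 'of', 'order', '5001']
print([p for w in words for p in wordpiece_tokenize(w, TOY_VOCAB)])
# ['pay', '##ment', 'of', 'order', '500', '##1']
```

Subword splitting lets a model represent words it has never seen as combinations of known pieces instead of discarding them.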

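In the fourth stage, intent recognition, the chatbot determines what the user wants to accomplish.

In enterprise environments, this step is often the biggest single driver of a chatbot’s overall accuracy; by some estimates it accounts for nearly 70% of it.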
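As a rough illustration only, the sketch below scores a few hypothetical intents by keyword overlap and falls back when nothing matches. Enterprise systems typically use trained classifiers or language models instead; the intent names, keywords, and threshold here are assumptions.

```python
import re

# Hypothetical intents with hand-picked trigger keywords (illustration only).
INTENT_KEYWORDS = {
    "track_order": {"status", "track", "where", "delivered", "shipping"},
    "make_payment": {"pay", "payment", "invoice", "checkout"},
    "cancel_order": {"cancel", "refund", "return"},
}


def detect_intent(text: str, threshold: int = 1) -> tuple[str, int]:
    """Score each intent by keyword overlap; fall back when nothing scores high enough."""
    tokens = set(re.findall(r"\w+", text.lower()))
    scores = {intent: len(tokens & keywords) for intent, keywords in INTENT_KEYWORDS.items()}
    # Ties go to the intent listed first in INTENT_KEYWORDS.
    best_intent = max(scores, key=scores.get)
    if scores[best_intent] < threshold:
        return "fallback", 0
    return best_intent, scores[best_intent]


print(detect_intent("Check order status 5029 delivered to Vietnam"))  # ('track_order', 2)
print(detect_intent("Payment of order 5001"))  # ('make_payment', 1)
```

The explicit fallback matters in practice: routing an unrecognized request to a clarifying question or a human agent is usually safer than guessing.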

In the fifth stage, entity extraction, the chatbot identifies key pieces of information (entities) within the message, such as order numbers or locations.

Example:

“Check order status 5029 delivered to Vietnam”

→ Intent: Track order

→ Entities: order_id = 5029, location = Vietnam
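The example can be reproduced with a small rule-based extractor. This sketch assumes order IDs are standalone numbers of at least four digits and that locations come from a small hypothetical gazetteer; production systems typically use trained named-entity recognition (NER) models and would also need to handle multi-word place names.

```python
import re

# Hypothetical gazetteer of known locations (lowercase for case-insensitive lookup).
KNOWN_LOCATIONS = {"vietnam", "japan", "singapore", "netherlands"}


def extract_entities(text: str) -> dict[str, str]:
    """Pull an order ID and a location out of a message using simple rules."""
    entities = {}
    # Assumption: order IDs are standalone numbers of at least four digits.
    order_match = re.search(r"\b\d{4,}\b", text)
    if order_match:
        entities["order_id"] = order_match.group()
    # Look up each alphabetic word in the gazetteer, ignoring case; keep the first hit.
    for word in re.findall(r"[A-Za-z]+", text):
        if word.lower() in KNOWN_LOCATIONS:
            entities["location"] = word
            break
    return entities


print(extract_entities("Check order status 5029 delivered to Vietnam"))
# {'order_id': '5029', 'location': 'Vietnam'}
```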

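In the sixth and final stage, the chatbot produces the appropriate action or reply based on the recognized intent, the extracted entities, and the conversation context.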

A reliable enterprise chatbot must maintain accuracy, brand voice consistency, and contextual awareness.
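A minimal template-based sketch of this stage, turning an intent and its entities into a reply. The order data, templates, and `generate_response` function are hypothetical stand-ins for real backend calls and brand-approved wording; the `context` dictionary shows one simple way to carry an order number across conversation turns.

```python
# Hypothetical order data standing in for a backend system call.
FAKE_ORDERS = {"5029": "out for delivery", "5001": "awaiting payment"}

# Fixed, pre-approved wording keeps replies consistent with the brand voice.
TEMPLATES = {
    "track_order": "Order {order_id} is currently {status}.",
    "make_payment": "I can help you pay for order {order_id}. Its current status is {status}.",
}


def generate_response(intent: str, entities: dict[str, str], context: dict[str, str]) -> str:
    """Pick a reply from the intent and entities, using conversation context for gaps."""
    if intent not in TEMPLATES:
        return "Sorry, I didn't catch that. Could you rephrase?"
    # Fall back to an order ID mentioned earlier in the conversation, if any.
    order_id = entities.get("order_id") or context.get("last_order_id")
    if order_id is None:
        return "Could you share your order number?"
    context["last_order_id"] = order_id  # remember for follow-up turns
    status = FAKE_ORDERS.get(order_id)
    if status is None:
        return f"I couldn't find order {order_id}. Please double-check the number."
    return TEMPLATES[intent].format(order_id=order_id, status=status)


context: dict[str, str] = {}
print(generate_response("track_order", {"order_id": "5029", "location": "Vietnam"}, context))
# Order 5029 is currently out for delivery.
print(generate_response("track_order", {}, context))  # follow-up turn reuses order 5029
```

Fixed templates make brand voice easy to control; systems that generate replies with a language model trade some of that control for flexibility.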

Based on publicly available documentation from KLM and IBM, KLM’s approach closely aligns with these industry-validated NLP practices.

KLM handles an enormous volume of customer inquiries across multiple platforms each week. This led to:

They deployed an NLP pipeline featuring multi-level intent detection and aviation-specific entity extraction.

The system also integrates directly with flight data to deliver real-time updates.

DEHA Global brings together an experienced team of AI and data engineers with hands-on expertise in NLP, LLMs, and enterprise systems.

We partner with international businesses to build AI chatbot solutions anchored on three core strengths:

If your goal is to build an enterprise-grade AI chatbot that is intelligent, efficient, and cost-optimized, DEHA Global is ready to support you at every stage.